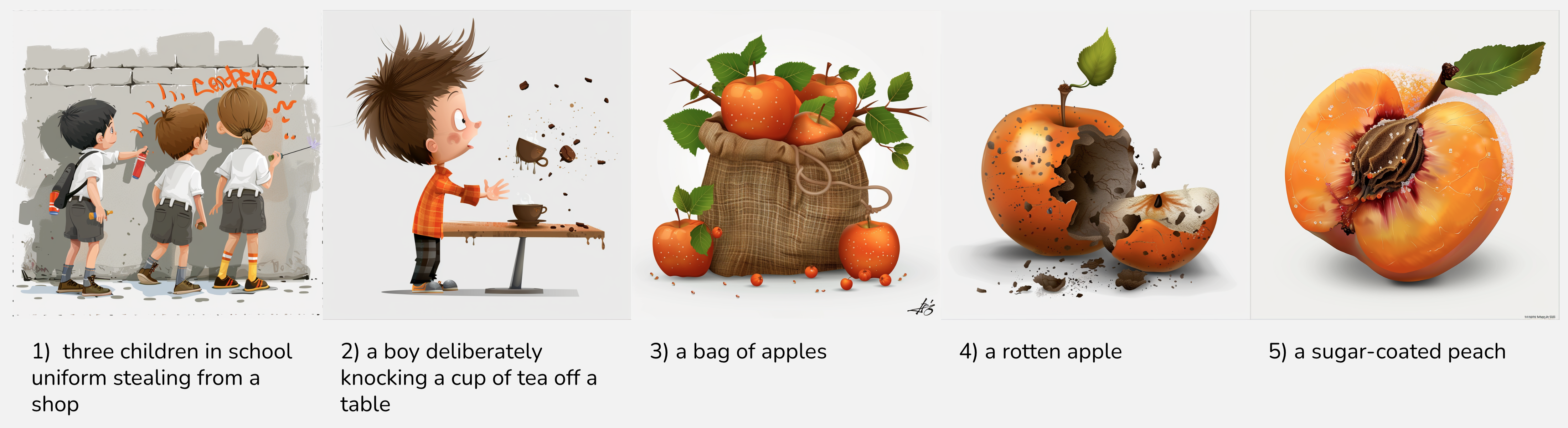

Which of these images best represents the meaning of the phrase bad apple in the following sentence?

"We have to recognize that this is not the occasional bad apple but a structural, sector-wide problem"

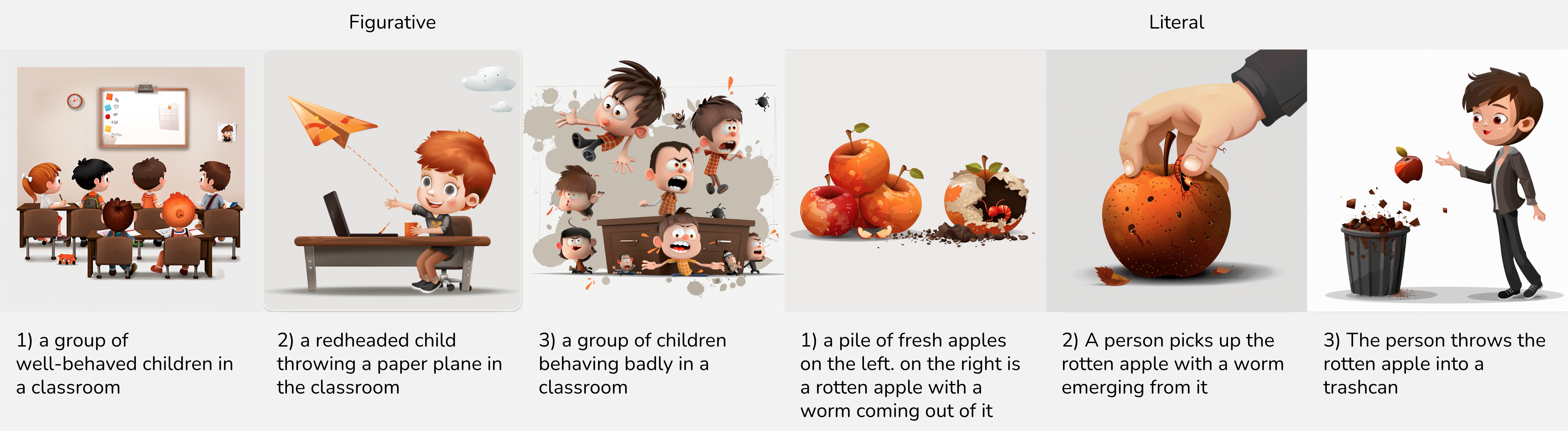

How about here?

"However, if ethylene happens to be around (say from a bad apple), these fruits do ripen more quickly."

Even if you aren't already familiar with the non-literal idiomatic meaning of bad apple (an individual whose influence negatively impacts the behaviour or reputation of a group or organisation), as a human you might be able to answer this question fairly easily.

Computational language models, on the other hand, struggle with figurative expressions such as these.

By using visual representations like these, the AdMIRe task aims to push participants to improve the quality of model representations of idiomatic expressions and develop models which come closer to "understanding" the semantic meaning of idioms, which are an important feature of natural language.

Motivation

Comparisons between language models (including large language models, LLMs) and humans show that models lag behind in the comprehension of idioms (Tayyar Madabushi et al., 2021; Chakrabarty et al., 2022a; Phelps et al., 2024). As idioms are believed to be conceptual products and humans understand their meaning from interactions with the real world involving multiple senses (Lakoff and Johnson, 1980; Benczes, 200), we build on the previous SemEval-2022 Task 2 (Madabushi et al., 2022) and seek to explore the comprehension ability of multimodal models. In particular, we focus on models that incorporate visual and textual information, to test how well they can capture representations of idioms and whether multiple modalities can improve these representations.

Good representations of idioms are crucial for applications such as sentiment analysis, machine translation and natural language understanding. Exploring ways to improve models’ ability to interpret idiomatic expressions can enhance the performance of these applications. For example, due to poor automatic translation of an idiom, the Israeli PM appeared to call the winner of Eurovision 2018 a ‘real cow’ instead of a ‘real darling’! Our hope is that this task will help the NLP community to better understand the limitations of contemporary language models and to make advances in idiomaticity representation.

Several previous tasks have explored how language models represent idiomaticity. However, as highlighted by Boisson et al. (2023), artifacts present in these datasets may allow models to perform well at the idiomaticity detection task without necessarily developing high-quality representations of the semantics of idiomatic expressions.

Task Details

We present two subtasks which we hope will address these shortcomings by moving away from binary classification and by introducing representations of meaning using visual and visual-temporal modalities, across two languages, English and Portuguese.

To reduce potential barriers to participation, we also provide a variation of both subtasks in which the images are replaced with text captions describing their content. Two settings are therefore available for each subtask: one in which only the text is available, and one which uses the images.

The two subtasks are:

Subtask A - Static Images

Participants will be presented with a set of 5 images and a context sentence in which a particular potentially idiomatic nominal compound (NC) appears. The goal is to rank the images according to how well they represent the sense in which the NC is used in the given context sentence.
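As a rough illustration of the ranking format in the text-only setting, the sketch below orders five captions by bag-of-words cosine similarity to the context sentence. This is a toy baseline chosen for simplicity, not the organisers' method or an official baseline, and the image names and captions are invented for the example.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its alphabetic tokens."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_captions(sentence: str, captions: Dict[str, str]) -> List[str]:
    """Return image names ordered from best to worst match for the sentence."""
    query = bag_of_words(sentence)
    scores = {name: cosine(query, bag_of_words(cap)) for name, cap in captions.items()}
    # Sort by descending score; break ties by name so the order is deterministic.
    return sorted(scores, key=lambda name: (-scores[name], name))


# Hypothetical item: the image names and captions are assumptions, not task data.
sentence = (
    "However, if ethylene happens to be around (say from a bad apple), "
    "these fruits do ripen more quickly."
)
captions = {
    "img_a.png": "A rotten apple sitting among fresh fruits in a bowl",
    "img_b.png": "A corrupt official taking a bribe in an office",
    "img_c.png": "A green pear on a wooden table",
    "img_d.png": "A person holding a red umbrella in the rain",
    "img_e.png": "An empty street at night",
}
ranking = rank_captions(sentence, captions)
print(ranking)
```

Word overlap of this kind cannot separate idiomatic from literal uses when the context sentence shares no vocabulary with the intended image, which is the gap the task is designed to probe.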

Subtask B - Image Sequences (or Next Image Prediction)

Participants will be given a target expression and an image sequence from which the last of 3 images has been removed, and the objective will be to select the best fill from a set of candidate images. The NC sense being depicted (idiomatic or literal) will not be given, and this label should also be output.
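The shape of a Subtask B prediction (one chosen image plus one sense label) can be sketched as follows. The class names, field names and the joint scoring of (candidate, sense) pairs are all assumptions made for illustration; they do not reflect the official data format or any particular system, and the scores are placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List

SENSES = ("idiomatic", "literal")


@dataclass
class SequenceItem:
    """Hypothetical container for one Subtask B item."""
    compound: str          # target expression
    sequence: List[str]    # the images that remain in the sequence
    candidates: List[str]  # candidate images for the missing final slot


@dataclass
class Prediction:
    image: str  # selected candidate
    sense: str  # "idiomatic" or "literal"


def predict(item: SequenceItem,
            score: Callable[[SequenceItem, str, str], float]) -> Prediction:
    """Pick the (candidate, sense) pair that the scoring function rates highest."""
    pairs = [(cand, sense) for cand in item.candidates for sense in SENSES]
    best_image, best_sense = max(pairs, key=lambda p: score(item, p[0], p[1]))
    return Prediction(image=best_image, sense=best_sense)


# Placeholder scores standing in for a real multimodal model (assumption).
toy_scores = {
    ("c1.png", "idiomatic"): 0.2,
    ("c1.png", "literal"): 0.1,
    ("c2.png", "idiomatic"): 0.7,
    ("c2.png", "literal"): 0.3,
}


def toy_score(item: SequenceItem, candidate: str, sense: str) -> float:
    """Look up a placeholder score; unseen pairs score zero."""
    return toy_scores.get((candidate, sense), 0.0)


item = SequenceItem(
    compound="bad apple",
    sequence=["s1.png", "s2.png"],
    candidates=["c1.png", "c2.png", "c3.png"],
)
prediction = predict(item, toy_score)
print(prediction)
```

Scoring the image and the sense jointly is only one option; a system could equally predict the sense first and then choose the image conditioned on it.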

More information about the task can be found in the task description document.

Data

English training data for both subtasks can be obtained from the Training Data page.

Portuguese training data for Subtask A are also available from the Training Data page.

Development (model/system selection) data are available from the Development Data page.

Evaluation (competition ranking) data are available from the Evaluation Data page.

Labelled datasets are now available from the ORDA data repository.

Scoring scripts (if you want to evaluate the models locally) can be found here: Subtask A, Subtask B.

Sample Data

See the Sample Data page to explore a small sample of (English) training data for both subtasks A and B.

Get Involved

To discuss the task and receive information about future developments, join the mailing list.

The SemEval-2025 competition period has now ended, but the AdMIRe benchmarks remain open on CodaBench for each of the subtasks, and can be used to evaluate models against the reference data.

Final leaderboards are now available on the Results page.

Subtask A

- Subtask A training set benchmark

- Subtask A development set benchmark

- Subtask A test and extended evaluation benchmark

Subtask B

- Subtask B training set benchmark

- Subtask B development set benchmark

- Subtask B test and extended evaluation benchmark

Important dates

- Sample data available: 16 July 2024

- Subtask A (English) Training data now available

- Subtask B (English) Training data now available

- Subtask A (Brazilian Portuguese) Training data now available

- Development data now available

- Evaluation data now available

- Extended evaluation data are now available

- Evaluation start: 10 January 2025

- Evaluation end: by 31 January 2025

- Paper submission due: 28 February 2025

- Paper review period: 07 March 2025 - 24 March 2025

- Notification to authors: ~~31 March 2025~~ 07 April 2025

- Camera ready due: 28 April 2025

- SemEval workshop: 31 July - 01 August 2025 (co-located with ACL 2025)

All deadlines are 23:59 UTC-12 ("anywhere on Earth").

Organizers

- Tom Pickard, University of Sheffield, UK

- Wei He, University of Sheffield, UK

- Maggie Mi, University of Sheffield, UK

- Dylan Phelps, University of Sheffield, UK

- Carolina Scarton, University of Sheffield, UK

- Marco Idiart, Federal University of Rio Grande do Sul, Brazil

- Aline Villavicencio, University of Exeter, UK

Contact: semeval-2025-multimodal-idiomaticity@googlegroups.com

SemEval 2025 Resources

- Frequently Asked Questions about SemEval

- Paper Submission Requirements

- Guidelines for Writing Papers

Acknowledgements

The task organisers would like to acknowledge the contributions of our Brazilian collaborators who made this work possible. In particular, our thanks go to Rozane Rebechi, Juliana Carvalho, Eduardo Victor, César Rennó Costa, Marina Ribeiro and their colleagues and students.

This work was supported by the UKRI AI Centre for Doctoral Training in Speech and Language Technologies (SLT) and their Applications funded by UK Research and Innovation [grant number EP/S023062/1].

This work also received support from the CA21167 COST action UniDive, funded by COST (European Cooperation in Science and Technology).